Given that SAP is architected to support a distributed three-tiered design, many configuration options exist that can potentially affect high availability. Similar performance considerations apply as well, because the database, application, and Web tiers are all affected. For example, a back-end network is recommended to interconnect the database and application servers, another network interconnects the application servers with the Web/Internet layers, and a third, public network addresses client requirements. If you plan on pulling backups across the network (rather than through a disk subsystem that supports direct-attached SCSI or Fibre Channel tape drives), a separate back-end network subnet is highly recommended as well.

In all cases, switched 100Mbit network segments are warranted, if not Gigabit Ethernet. Backups are very bandwidth-intensive. If backup data is transmitted over otherwise crowded network lines, the application servers may, for example, lose their connections to the database server. Network-enabled backups therefore warrant Gigabit more than any other case. Beyond backups, the next best candidate for Gigabit is the network connecting the application and database servers, where traffic can also be quite heavy. This is especially true as more and more enterprises grow their mySAP environment by adding new SAP components but insist on leveraging the same back-end network.
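
To put the bandwidth argument into rough numbers, the following Python sketch estimates how long a full backup would take to cross a 100Mbit link versus a Gigabit link. The 500 GB database size and the 70 percent efficiency factor are hypothetical assumptions chosen for illustration, not measurements. Real throughput also depends on protocol overhead, tape drive speed, and competing traffic.

```python
def transfer_hours(size_gb: float, link_mbit: float, efficiency: float = 1.0) -> float:
    """Estimate the hours needed to move size_gb gigabytes over a link_mbit Mbit/s link.

    efficiency is the assumed fraction of line rate actually achieved
    (1.0 = ideal wire speed). Decimal units are used: 1 GB = 8,000 Mbit.
    """
    if link_mbit <= 0 or not 0 < efficiency <= 1:
        raise ValueError("link speed must be positive and efficiency in (0, 1]")
    size_mbit = size_gb * 8_000
    seconds = size_mbit / (link_mbit * efficiency)
    return seconds / 3600


if __name__ == "__main__":
    db_size_gb = 500  # hypothetical database size, not a measured value
    for speed in (100, 1_000):  # 100Mbit Fast Ethernet vs. Gigabit Ethernet
        ideal = transfer_hours(db_size_gb, speed)
        loaded = transfer_hours(db_size_gb, speed, efficiency=0.7)  # assumed 70% efficiency
        print(f"{speed:>5} Mbit/s: {ideal:5.1f} h ideal, {loaded:5.1f} h at 70% efficiency")
```

For the hypothetical 500 GB database, the ideal figures work out to roughly 11.1 hours at 100Mbit versus about 1.1 hours at Gigabit, before any overhead is considered.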

The application/Web tier often consists of a single 100Mbit subnet. Because application and Web servers are usually not considered critical from a backup perspective, dedicated network backup segments are rarely warranted here. These servers typically contain fairly static data that lends itself to weekly or other less frequent backups.

The Web tier is typically split, though. In SAP ITS implementations, for instance, an application gateway (AGATE) connects to the SAP application servers, a Web gateway (WGATE) connects to the AGATE, and end users connect to the WGATE. The WGATE is therefore often housed in a secure DMZ for access via the Internet or an intranet, while the AGATE remains behind a firewall, safe in its own secure subnet, as you see in Figure 1. In this way, the network accommodates the performance needs of the enterprise without sacrificing security.
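
One way to reason about this split is to list which direct connections are permitted and check that every hop a request takes is on that list. The Python sketch below is a hypothetical model of the ITS flows described above, not a firewall configuration; the node names (for example `sap_app_server`) are illustrative labels.

```python
from typing import Sequence

# Hypothetical model of the split ITS Web tier: each pair is a permitted
# direct connection (source, destination). Anything else is blocked.
ALLOWED_FLOWS = {
    ("end_user", "WGATE"),        # users reach only the Web gateway in the DMZ
    ("WGATE", "AGATE"),           # the DMZ gateway crosses the firewall to AGATE
    ("AGATE", "sap_app_server"),  # AGATE talks to the SAP application servers
}


def is_allowed(src: str, dst: str) -> bool:
    """Return True if a direct connection from src to dst is permitted."""
    return (src, dst) in ALLOWED_FLOWS


def path_is_valid(path: Sequence[str]) -> bool:
    """Return True if every hop along path is a permitted direct connection."""
    return all(is_allowed(a, b) for a, b in zip(path, path[1:]))


if __name__ == "__main__":
    print(path_is_valid(["end_user", "WGATE", "AGATE", "sap_app_server"]))  # True
    print(path_is_valid(["end_user", "AGATE", "sap_app_server"]))           # False
```

The second path fails because end users are never allowed to reach the AGATE directly, which is exactly what keeping it behind the firewall is meant to guarantee.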

Network Fault Tolerance

As I indicated earlier, there are many ways to architect a network solution for SAP. Simply segregating each layer in the solution stack meets minimum performance requirements but does not address availability. In fact, it increases the chance of a failure, because each new segment introduces more single-point-of-failure components. Fault tolerance must therefore be built into the design, not considered as an afterthought. To this end, I will next discuss the primary method by which network availability is designed into the SAP data center, an approach I call availability through redundancy.

Just as a redundant power infrastructure starts with the servers, disk subsystems, and other hardware components, so too does a redundant network infrastructure start with the networked servers. Here, redundant network interface cards, or NICs, are specified, each cabled to redundant network hubs or switches, which are in turn connected to redundant routers. Figure 2 illustrates this relationship between the servers and a highly available network infrastructure.

Network fault tolerance starts not only with two (or otherwise redundant) NICs, but also with OS-specific drivers capable of pairing two or more physical network cards into one “virtual” card. Hardware vendors often refer to this as teaming or pooling, though other labels have been used for this important piece of the network puzzle. Regardless of the label, the idea is simple: Pair two or more network cards together, and use them concurrently to send and receive the exact same packets of data. Then, use the data on one network card/network segment (referred to as the “primary” NIC), and discard or ignore the identical data on the other NIC as long as the network is up and things are going well. If a failure occurs with one of the NICs, network segments, or hubs/switches on the “redundant” path, continue processing packets on the primary path as always; it is business as usual. However, if a failure occurs with one of the NICs, network segments, or hubs/switches on the “primary” path, immediately turn to the redundant path and start processing the data moving through it instead.
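
The path-selection rule just described can be modeled in a few lines. The Python sketch below is a simplified, hypothetical model of an active/standby team, not any vendor's teaming driver. Real drivers detect failures through mechanisms such as link-state monitoring, and the class and field names here are illustrative only.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class NetworkPath:
    """One leg of a NIC team: the NIC, its cable/segment, and the switch it reaches."""
    nic: str
    switch: str
    nic_up: bool = True
    segment_up: bool = True
    switch_up: bool = True

    def healthy(self) -> bool:
        return self.nic_up and self.segment_up and self.switch_up


@dataclass
class NicTeam:
    """Two physical NICs presented as one virtual NIC, primary path preferred."""
    primary: NetworkPath
    redundant: NetworkPath

    def active_path(self) -> Optional[NetworkPath]:
        """Return the path whose packets are processed; the other path's copies are discarded."""
        if self.primary.healthy():
            return self.primary    # business as usual, even if the redundant path has failed
        if self.redundant.healthy():
            return self.redundant  # primary path failed: switch to the redundant path
        return None                # both paths down: the server is off this network


if __name__ == "__main__":
    team = NicTeam(NetworkPath("NIC1", "switch_A"), NetworkPath("NIC2", "switch_B"))
    team.primary.segment_up = False  # simulate a cable failure on the primary path
    print(team.active_path().nic)    # NIC2
```

Note that a failure on the redundant path changes nothing about which data is processed; it only removes the safety net until it is repaired.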

Today, nearly every operating system supports NIC teaming for high availability. Many OSes also support variations of NIC teaming geared toward improving performance, including network bonding, adaptive load balancing, Fast EtherChannel, Gigabit EtherChannel, and more. Remember, though, that these latter variations do not necessarily improve availability. To gain failover protection, teaming must either be inherently supported by these performance-enhancing techniques or be used in conjunction with them.


Some operating systems simply do not support specific network cards when it comes to NIC teaming. Further, Microsoft’s cluster service specifically prohibits teaming the private high-availability server interconnect, or heartbeat connection, regardless of the type of NIC. Network availability in this case is gained by configuring the cluster service so that both the private and public networks can carry “still alive” messages between the cluster nodes. As an aside, best practices suggest that the private network be preferred for this activity, and that the public network be used only when the private network fails or is otherwise unavailable.
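
The preference order for heartbeat traffic amounts to a simple fallback rule. In the cluster service itself this is configured through the cluster's network settings. The Python function below only models the selection order described above, and its name and return values are hypothetical.

```python
from typing import Optional


def heartbeat_network(private_up: bool, public_up: bool) -> Optional[str]:
    """Choose the network that carries cluster "still alive" messages.

    Prefers the private interconnect and falls back to the public network
    only when the private one is unavailable; returns None if neither is up.
    """
    if private_up:
        return "private"
    if public_up:
        return "public"
    return None


if __name__ == "__main__":
    print(heartbeat_network(private_up=True, public_up=True))   # private
    print(heartbeat_network(private_up=False, public_up=True))  # public
```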


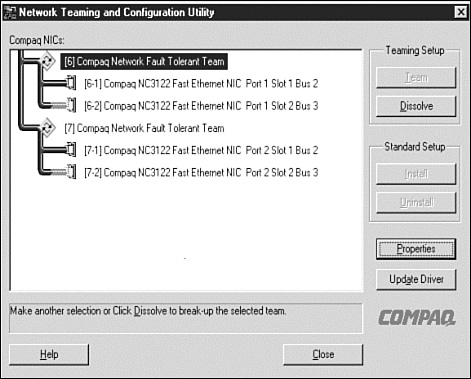

Let us return to our primary discussion of designing and implementing highly available networks for SAP. Remember, each NIC in the team should be connected to a different switch, which in turn is connected to a separate router or to a separate card in a highly available router configuration (as required). Each redundant switch, router, and any other network gear must then be serviced by redundant power to create a true no-single-point-of-failure (NSPOF) configuration. As for best practices, I recommend that you also adhere to the following guidelines:

All network interfaces must be hard-coded to a specific speed and duplex setting, for example, 100Mbit Full Duplex. Refrain from using the “auto configure” function, however tempting it is to skip the three or four mouse clicks per server needed to hard-code each NIC setting. Ignoring this guideline is especially problematic in clusters, or in network environments where switches and hubs service different network segments running at different speeds. The “auto configure” setting can easily mask network problems, including intermittently failing network cards, and ultimately add hours or even days to troubleshooting cluster issues.
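
To audit this guideline across many servers, you could collect each NIC's configured speed and duplex into a simple inventory and flag anything still left on auto. The Python sketch below assumes such an inventory already exists; the `NicSetting` record and its fields are hypothetical, and the code does not query the NICs itself, since how those settings are read varies by OS and driver.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class NicSetting:
    """Hypothetical inventory record for one NIC's link configuration."""
    server: str
    nic: str
    speed_mbit: Optional[int]  # None means the speed is auto-negotiated
    duplex: str                # "full", "half", or "auto"


def auto_configured(settings: List[NicSetting]) -> List[Tuple[str, str]]:
    """Return (server, nic) pairs whose speed or duplex is left on auto."""
    return [
        (s.server, s.nic)
        for s in settings
        if s.speed_mbit is None or s.duplex.lower() == "auto"
    ]


if __name__ == "__main__":
    inventory = [
        NicSetting("db01", "NIC1", 100, "full"),
        NicSetting("db01", "NIC2", None, "auto"),
        NicSetting("app01", "NIC1", 1000, "auto"),
    ]
    for server, nic in auto_configured(inventory):
        print(f"{server}/{nic}: hard-code speed and duplex")
```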

Keep in mind that NIC teaming is implemented as a software/driver-level function. Thus, any time one or more NICs participating in a team are replaced, swapped out, or reconfigured, the team should be dissolved and then re-created. Failure to do so can create issues that are difficult to troubleshoot, including intermittent or unusual behavior of the NIC team.

Most servers manufactured in the last 10 years take advantage of multiple system buses. Each bus is normally capable of a certain maximum throughput. For a 64-bit, 66MHz PCI bus, this number approaches 528 MB/second (8 bytes per transfer × 66 million transfers per second). To achieve even greater aggregate throughput, then, NICs need to reside on different buses. Taken one step further, the idea of multiple buses should also get you thinking about high availability. Remember, an NSPOF solution theoretically relies on redundant components everywhere, even inside the servers. Multiple PCI buses fit the bill: as an added level of fault tolerance, place the NICs on different PCI buses.
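
The 528 MB/second figure follows directly from the bus width and clock rate, and the placement rule is easy to check mechanically. In the Python sketch below, the bus numbers assigned to each NIC are hypothetical. On a real server they would come from the hardware configuration utility or the OS device listing.

```python
from typing import Mapping


def pci_peak_mb_per_s(width_bits: int, clock_mhz: float) -> float:
    """Theoretical peak of a parallel PCI bus: bytes per transfer x million transfers/s.

    Assumes one data transfer per clock cycle and ignores protocol overhead.
    """
    return width_bits / 8 * clock_mhz


def on_distinct_buses(nic_to_bus: Mapping[str, int]) -> bool:
    """Return True if no two NICs in the team share a PCI bus."""
    buses = list(nic_to_bus.values())
    return len(set(buses)) == len(buses)


if __name__ == "__main__":
    print(pci_peak_mb_per_s(64, 66))                  # 528.0
    print(on_distinct_buses({"NIC1": 0, "NIC2": 2}))  # True
    print(on_distinct_buses({"NIC1": 1, "NIC2": 1}))  # False
```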

Finally, if multiple NIC teams are configured (for example, on SAP application servers, where redundant connections are preferred to both the database server and the public network or Internet/Web intranet layer), it is important to ensure that the MAC address of the first team represents the primary address of the server. The server's primary address thus maps back to its name on the public network. This sounds a bit complicated, but it should become clearer in Figure 3.

Here, Network Fault Tolerant Team #6 represents the public team. Further, the MAC address of NIC #1 of this team is the MAC address “seen” by other servers. In this way, both name resolution and failover work as expected.
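
The NSPOF cabling rule and the primary-MAC rule can both be expressed as checks over a description of the server's teams. The Python sketch below assumes, as in Figure 3, that a team presents the MAC address of its first member (NIC #1). Apart from Team #6, the team names, MAC addresses, and switch labels are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class TeamMember:
    nic: str
    mac: str
    switch: str  # switch the NIC is cabled to


@dataclass
class Team:
    name: str
    public: bool
    members: List[TeamMember]

    @property
    def mac(self) -> str:
        # Assumption: the team presents the MAC address of its first member (NIC #1).
        return self.members[0].mac


def check_server(primary_mac: str, teams: List[Team]) -> List[str]:
    """Return a list of problems found; an empty list means the checks passed."""
    problems = []
    for team in teams:
        switches = [m.switch for m in team.members]
        if len(set(switches)) != len(switches):  # two NICs on one switch is a single point of failure
            problems.append(f"{team.name}: members share a switch")
    public_teams = [t for t in teams if t.public]
    if len(public_teams) != 1:
        problems.append("expected exactly one public team")
    elif public_teams[0].mac.lower() != primary_mac.lower():
        problems.append(f"{public_teams[0].name}: team MAC is not the server's primary MAC")
    return problems


if __name__ == "__main__":
    team6 = Team("Team #6", True, [
        TeamMember("NIC1", "02:00:00:00:00:01", "switch_A"),
        TeamMember("NIC2", "02:00:00:00:00:02", "switch_B"),
    ])
    print(check_server("02:00:00:00:00:01", [team6]))  # []
```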

Central Systems and Optimal Network Configuration

Network configurations for SAP are also affected by the type of server deployed in the system landscape. All of our attention thus far has been focused on three-tiered architectures, where the database, application, and client components of SAP are broken out onto three different servers/hardware platforms. However, it is also quite common to deploy two-tiered SAP systems, or “Central Systems,” especially for small training systems or uncomplicated testing environments. In this case, the first question a network engineer new to SAP asks is “Do I cable the system to the public network or to the back-end database network?”

The question is valid. And because each option seems to make sense, there has been debate in the past on this very topic, to the point where SAP finally published an excellent paper on network recommendations for different system landscapes. Let’s assume that our Central System is an R/3 Development server, and take a closer look at each option in an effort to arrive at the best answer.

If our new network engineer cables the Central System to the back-end network (a network segment upon which other three-tiered SAP database servers reside), the following points are true:

Given that the Central System is also a database server, the database resides where it is “supposed to”: on the back-end network.

Any traffic driven by the DB server thus inherently remains on the back end as well. For example, if the SAP BW Extractors are loaded on this system, all of the network traffic associated with pulling data from our R/3 system to populate BW cubes stays off the public network. Again, our network traffic remains where it is supposed to.

Any other DB-to-DB traffic, such as transports, mass updates, data loads, and so on, also stays on the back end.

However, because our server is not on the public network, it is not as easily accessed by users and administrators. That is, name resolution becomes a challenge.

Further, because the back-end network tends to be much busier than typical public networks (which, from an SAP perspective, service only low-bandwidth SAPGUI and print job traffic most of the time), end-user response times will vary widely and suffer across the board.

These are interesting points, but imagine how complex the issue becomes if your Central System grows into an SAP cluster. Because a cluster is implemented as a three-tiered configuration, you might be inclined to hang one node (the database node) off the private network and the Central Instance/Application Server node off the public network. But what happens when the Central Instance/Application Server node fails over to the DB node, which then becomes the CI/Application server while retaining its DB server role? Or vice versa, when the CI/Application server takes on the role of the database server, too?

By now, you should understand that the best network solution in this case is not an either/or answer. Rather, the best solution requires access to both the private and public networks. This is accomplished by adding another network card to the server, NIC #2, and setting it up for connectivity to the back-end network. Meanwhile, the original NIC (now labeled NIC #1) is moved to the public network, where it is assigned a public IP address. Name resolution and other public network-related accessibility concerns are now easily addressed. And by using static routing, all DB-to-DB traffic is retained on the back-end network segment as desired. Best of all, the cost of this solution is minimal: a second NIC, another IP address, and a few hours of time are really all that is required.
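
The reason static routing keeps DB-to-DB traffic on the back end is that the OS sends each packet out the interface whose route most specifically matches the destination (longest-prefix match). The Python sketch below models that decision for the dual-homed Central System. The subnets and interface labels are hypothetical, and the routes themselves would be configured with the operating system's own tools.

```python
import ipaddress
from typing import List, Tuple

# Hypothetical routing table for the dual-homed Central System.
ROUTES: List[Tuple[ipaddress.IPv4Network, str]] = [
    (ipaddress.ip_network("10.10.20.0/24"), "NIC #1 (public)"),      # public subnet
    (ipaddress.ip_network("192.168.50.0/24"), "NIC #2 (back-end)"),  # back-end DB subnet
    (ipaddress.ip_network("192.168.60.0/24"), "NIC #2 (back-end)"),  # static route to another back-end segment
    (ipaddress.ip_network("0.0.0.0/0"), "NIC #1 (public)"),          # default route
]


def outgoing_interface(dest: str) -> str:
    """Pick the interface for dest using longest-prefix match, as IP routing does."""
    addr = ipaddress.ip_address(dest)
    matches = [(net, nic) for net, nic in ROUTES if addr in net]
    if not matches:
        raise LookupError(f"no route to {dest}")
    _, nic = max(matches, key=lambda route: route[0].prefixlen)
    return nic


if __name__ == "__main__":
    for host in ("192.168.60.7", "10.10.20.15", "172.16.1.1"):
        print(host, "->", outgoing_interface(host))
```

Traffic to the other database servers matches a /24 back-end route and leaves through NIC #2, while everything else falls through to the default route on the public side.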

To verify that your static routing indeed operates as it should after SAP has been installed, launch a SAPGUI session, log in with typical SAP Basis rights and privileges, and follow these steps:

1. Execute transaction /nSMLG.

2. Press F6 to go to the Message Server Status Area.

3. Verify that your PUBLIC subnet (under “MSGSERVER”) is listed under the Logon Group Name. If this is not the case, return to the previous screen, double-click each application server under the Instance column, and then click the Attributes tab.

4. In the IP address field, enter the associated PUBLIC subnet for each server, and then click the Copy button.

5. Verify these settings again by highlighting the application server and pressing F6. The logon group will list the PUBLIC subnet.

By following these steps, you can verify that the traffic associated with end users stays on the public network, ensuring that their response times will be as fast as possible, and certainly faster than if end-user traffic were routed over the back-end network.
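
When a landscape has many application servers, it can help to record the IP address shown for each one in SMLG and check the list against the public subnet. The Python sketch below performs only that offline comparison. The server names, addresses, and subnet are hypothetical, and the code does not connect to SAP or read SMLG itself.

```python
import ipaddress
from typing import Dict, List

PUBLIC_SUBNET = ipaddress.ip_network("10.10.20.0/24")  # hypothetical public subnet


def servers_off_public(msg_server_ips: Dict[str, str], public=PUBLIC_SUBNET) -> List[str]:
    """Return, sorted, the application servers whose recorded IP is outside the public subnet."""
    return sorted(
        name for name, ip in msg_server_ips.items()
        if ipaddress.ip_address(ip) not in public
    )


if __name__ == "__main__":
    recorded = {"app01": "10.10.20.11", "app02": "192.168.50.12"}  # hypothetical values
    print(servers_off_public(recorded))  # ['app02']
```

Any server the function returns is a candidate for correcting its IP address on the Attributes tab, as in step 4.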

With your highly available and optimized network infrastructure installed, you can now turn to the next few layers in the SAP Solution Stack: the network server and the server operating system.